With the rising popularity of deepfakes, “a technique for human image synthesis based on Artificial Intelligence (AI)”, South Korean giant Samsung has joined the field with a new AI technology that allows a neural network to turn a single still image of a person into a convincing video.

As reported by Motherboard, researchers at the Samsung AI Center in Moscow made the breakthrough by training a “deep convolutional network” on various videos of talking heads, allowing it to identify and retain information about certain facial features. With this stored facial information, the network can animate a still image of a person.

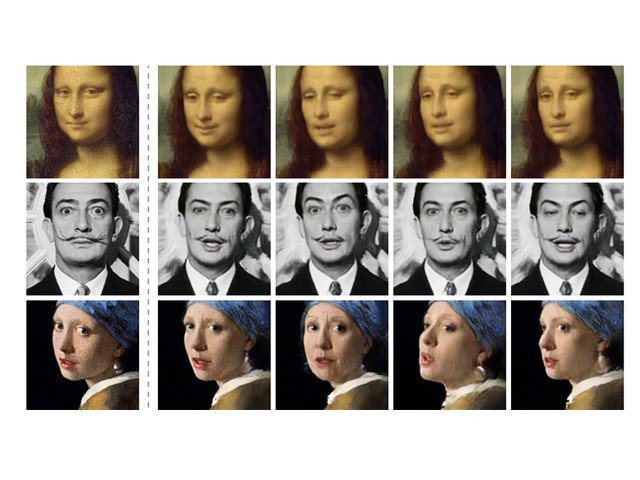

The results of the new AI tech were presented in a paper titled “Few-Shot Adversarial Learning of Realistic Neural Talking Head Models.” Though the deepfake videos created with Samsung’s tech are not yet fully convincing, the approach has one clear advantage. Other deepfake methods need multiple pictures of a person to make a video of them, while Samsung’s tech requires only one.


The company tested the tech on pictures of the Mona Lisa, Fyodor Dostoevsky, Salvador Dali, Albert Einstein and Marilyn Monroe, and created videos that were convincing enough to be taken as actual footage of a person. As the AI technology is still in its early stages, it needs a lot of improvement, and it may take a few years before it can produce videos that no one can recognize as fake.

Besides being an amazing breakthrough, this tech is dangerous in the wrong hands. With it, anyone could fabricate a video of a person talking using only a single picture of them. Combined with a tool that can imitate people’s voices from snippets of sample audio, it could be used to create a video of anyone saying anything. As the tech improves, it will become difficult for people to tell a real video from a fake one. Let’s hope the tools needed to tell the two apart become more advanced as well.