Researchers from Stanford and Google assigned an AI program to transform aerial images into street maps and then back again. What surprised them was that the program was deliberately concealing information in its output to make the later reconstruction step easier. The researchers intended to accelerate the process of generating imagery for Google Maps from satellite photos, and that is where they noticed that things were going surprisingly fast.

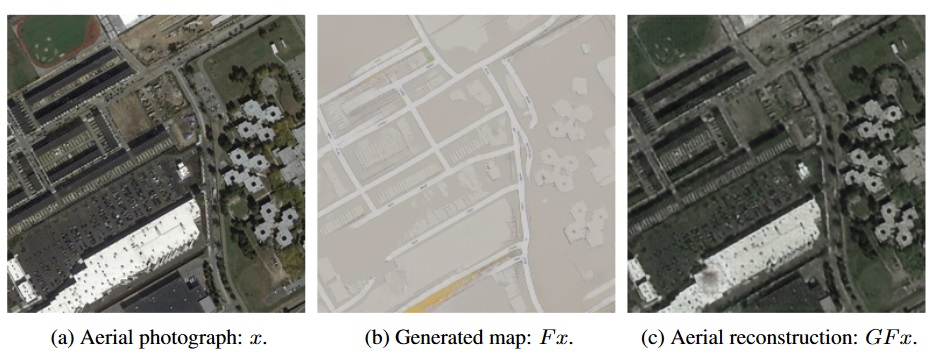

To get better insight, compare the images above:

If you take a closer look at the images, you will see that the AI regenerates the aerial photo with more dots than the original picture, which suggests it is using some stored data or scheme to cleverly recreate the image. The purpose of the AI, a model named CycleGAN, was to learn the features of both kinds of image shown above and map them onto each other. The grading criterion, however, was not how fast it could do so, but how closely the images match.
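To make "how closely the images match" concrete, here is a minimal sketch of a reconstruction score: the average per-pixel difference between the original aerial image and the one rebuilt from the map. The function name, the tiny 2x2 grayscale images, and the choice of mean absolute difference are illustrative assumptions, not the researchers' actual code.

```python
def cycle_consistency_loss(original, reconstructed):
    """Mean absolute per-pixel difference between two same-sized grayscale images.

    Images are lists of rows of integer pixel values (0-255).
    A score of 0.0 means the reconstruction matches the original exactly.
    """
    if len(original) != len(reconstructed):
        raise ValueError("images must have the same number of rows")
    total = 0
    count = 0
    for row_a, row_b in zip(original, reconstructed):
        if len(row_a) != len(row_b):
            raise ValueError("rows must have the same length")
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count


# Hypothetical 2x2 "aerial photo" and two candidate reconstructions.
aerial = [[50, 200], [120, 30]]
perfect = [[50, 200], [120, 30]]    # every detail recovered
blurry = [[100, 100], [100, 100]]   # detail lost on the round trip

print(cycle_consistency_loss(aerial, perfect))  # 0.0
print(cycle_consistency_loss(aerial, blurry))   # 60.0
```

A program graded this way is rewarded for getting the score as close to zero as possible, by whatever means it can find.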

As per the research paper:

We claim that CycleGAN is learning an encoding scheme in which it “hides” information about the aerial photograph x within the generated map F x. This strategy is not as surprising as it seems at first glance, since it is impossible for a CycleGAN model to learn a perfect one-to-one correspondence between aerial photographs and maps, when a single map can correspond to a vast number of aerial photos, differing for example in rooftop color or tree location.
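The idea of "hiding" one image inside another can be illustrated with a toy example. The sketch below uses classic least-significant-bit steganography, which is an analogy only and not the encoding CycleGAN learned: the top bits of each aerial pixel are tucked into the low bits of the matching map pixel, where the change is barely visible. The function names, the 4-bit split, and the pixel values are all hypothetical.

```python
BITS = 4  # low-order bits borrowed from each map pixel (an arbitrary choice)


def hide(map_pixels, aerial_pixels, bits=BITS):
    """Store the top `bits` bits of each aerial pixel in the low bits of the map pixel."""
    mask = (1 << bits) - 1
    keep_high = 0xFF ^ mask  # keeps the visible, high-order part of the map pixel
    return [(m & keep_high) | (a >> (8 - bits))
            for m, a in zip(map_pixels, aerial_pixels)]


def recover(stego_pixels, bits=BITS):
    """Rebuild an approximate aerial image from the hidden low bits alone."""
    mask = (1 << bits) - 1
    return [(s & mask) << (8 - bits) for s in stego_pixels]


# A flat, uniform stretch of map and four varied aerial pixels (8-bit grayscale).
map_pixels = [240, 240, 240, 240]
aerial_pixels = [37, 200, 90, 143]

stego = hide(map_pixels, aerial_pixels)
print(stego)           # [242, 252, 245, 248]
print(recover(stego))  # [32, 192, 80, 128]
```

The altered map differs from the plain one by at most 15 out of 255 per pixel, yet it carries enough detail to rebuild a rough copy of the aerial photo, which is how a single map can still be turned back into one specific photograph.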

It is certainly freaky to think that our AI software is getting this smart. Are you startled by this research? Leave us your thoughts in the comments.