Since the emergence of AI and chatGPT has taken the world by storm. Robots are in terms these days, and tech companies are trying to invest more and more to produce the best humanoid robots. Learning has been a holy grail in robotics for decades.

These systems will need to do more other than follow the given instructions; they need to learn more and more if they want to survive. Accurate robots need to know and adopt as many things as they can.

Learning from videos is an intriguing solution that’s been central for many years. Almost last year, the channels highlighted the WHIRL, a CMU-developed algorithm designed to train robotic systems by watching a recording of a human executing a task.

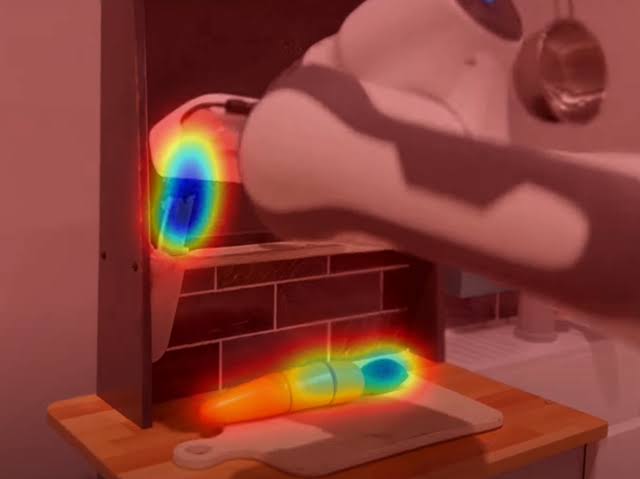

Deepak Pathak, assistant professor of CMU Robotics Institute, is showcasing VRB (Vision-Robotic Bridge), an evolution of WHIRL; the system will use videos of a human to demonstrate the task.

“This work will enable the robots to learn from the vast amount of internet videos available,” as per experts. Engineers have created robots that can learn new skills from videos. A team of engineers from Carnegie Mellon University (CMU) developed a model that enables robots to perform household tasks exactly as humans.

Robots can efficiently perform opening draws and picking up knives after watching a video of the action. The Visual Robotic Bridge (VRB) is a fantastic technique that requires no human oversight and helps you learn new skills in just 25 minutes.

Deepak Pathak stated, “the robot can learn where and how humans interact with different objects through watching videos; with this knowledge, we can train a model that makes two robots for complete similar tasks in varied environments.”

In addition, the VRB technique enables robots to learn and adopt the actions shown in a video, even in a different setting.

The technique works by identifying main contact points, such as picking up the knife and holding the things by understanding the motions to accomplish the target.

“We were able to take robots around campus and do all sorts of tasks, said Shukar Bahl, a Ph.D. student in robotics at CMU.

For example, the researchers showed a video of opening a drawer. The contact point is the handle, and the trajectory is the direction it opens, “after watching several videos of humans opening drawers, the robots can determine how to open any drawer.”

Robots can not perform with the same speed and accuracy as humans, but that doesn’t mean the occasional weirdly built cabinet won’t trouble us. If we are willing to enhance the accuracy, make larger datasets for training. CMU is relying on videos that are already embedded, like Epic Kitchen and Ego4D, the latter of which has almost 4,000 hours of egocentric videos of daily activities from across the world.

In addition, he also said that “robots can use this model to curiously explore the world around them. Instead of just flailing its arms, a robot can be more direct with how it interacts”.

“This work could enable robots to learn from the vast internet and YouTube videos available.”

🤖 Robotics often faces a chicken and egg problem: no web-scale robot data for training (unlike CV or NLP) b/c robots aren't deployed yet & vice-versa.

Introducing VRB: Use large-scale human videos to train a *general-purpose* affordance model to jumpstart any robotics paradigm! pic.twitter.com/csbvsfswuG

— Deepak Pathak (@pathak2206) June 13, 2023

However, the robots chosen for the research process successfully learned 12 new tasks during 200 hours of real-world tests. Besides, all the tasks were interlinked and straightforward, including opening a can and picking up the phone.

Researchers plan to develop the VRB system to allow robots to perform multi-step tasks. The researchers have presented their views in a paper titled ‘Affordances from human videos as a versatile representation for robotics.’ Which has planned to reset this month at the Conference of Vision and pattern recognition in Vancouver, Canada.

Read more: