The selfie is a virus that has infected everyone, and it has mutated into several forms as it spread around the world. Imagine your selfie turning up in Google Search with a caption that an advanced algorithm generated for it automatically: “A person with an awkward mouth formation...” Okay, it might not be that accurate.

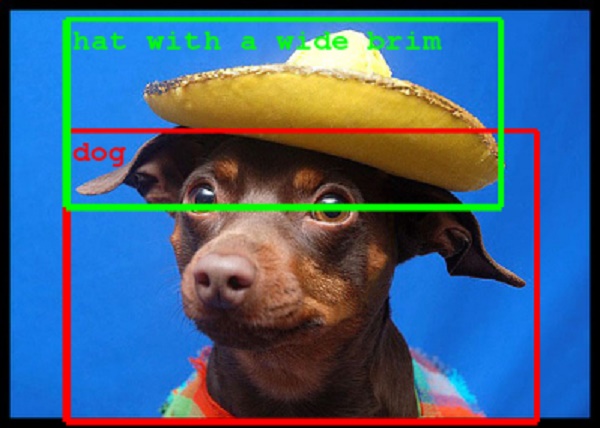

Google has developed a system that can teach itself to describe a photograph with a high degree of accuracy. In other words, Google is trying to generate a full natural-language description of an image, as explained in a research paper it recently published on its site. The image below gives the idea.

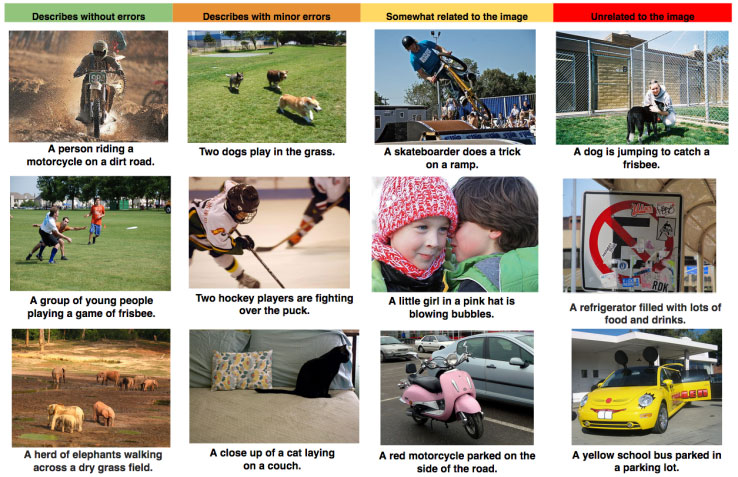

The typical approach to this problem is to first let a computer vision algorithm analyze the image and then use natural language processing to write a description from the result. Google is taking a different approach. It wants to merge recent computer vision and language models into a single, jointly trained system that takes an image and produces a human-readable sequence of words to describe it. According to Google, this kind of approach has worked well in machine translation systems, but captioning an image is a different problem from translating a sentence, and the results are not yet perfect.
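
To make the "image in, words out" idea concrete, here is a toy sketch in Python. It is not Google's model: the `encode_image` function, the `NEXT_WORD` table, and the `caption` function are all hypothetical stand-ins, and the table is written by hand, whereas a real system would learn both parts together from example images and captions. The sketch only shows the shape of the loop, in which each new word depends on the image and on the word before it.

```python
END = "<end>"  # hypothetical marker that tells the decoder to stop


def encode_image(pixels):
    """Stand-in encoder: reduce a list of brightness values (0..1) to one feature."""
    return "bright" if sum(pixels) / len(pixels) > 0.5 else "dark"


# Stand-in decoder: maps (image feature, previous word) to the next word.
# This table is invented for illustration; a trained model would learn it.
NEXT_WORD = {
    ("bright", None): "a",
    ("bright", "a"): "sunny",
    ("bright", "sunny"): "beach",
    ("bright", "beach"): END,
    ("dark", None): "a",
    ("dark", "a"): "night",
    ("dark", "night"): "street",
    ("dark", "street"): END,
}


def caption(pixels, max_words=10):
    """Build a caption one word at a time, conditioned on the image feature."""
    feature = encode_image(pixels)
    words, previous = [], None
    for _ in range(max_words):
        word = NEXT_WORD.get((feature, previous), END)
        if word == END:
            break
        words.append(word)
        previous = word
    return " ".join(words)


print(caption([0.9, 0.8, 0.7]))  # a sunny beach
print(caption([0.1, 0.2, 0.3]))  # a night street
```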

The Bilingual Evaluation Understudy (BLEU) score is a metric commonly used to measure how closely machine-generated text matches text written by a human. Google's captioning system scored between 27 and 59 points depending on the dataset, whereas a typical score for humans is around 69. That is still a huge step forward, and in the near future it might save you from writing those deeply thought-out captions for your selfies.
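
For a rough feel of how a BLEU-style score is computed, here is a simplified sketch in Python. It assumes a single human reference caption, whitespace tokenization, and n-grams up to length 2 (the `max_n` parameter), while standard BLEU is usually computed over a whole test set with up to 4-grams and may use several references. It is therefore not the exact setup behind the numbers above, and the `ngrams` and `bleu` function names are just illustrative.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=2):
    """Simplified single-reference BLEU on a 0-100 scale."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each n-gram count by how often it appears in the reference.
        matched = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precisions.append(math.log(matched / total))
    # Brevity penalty: punish candidates that are shorter than the reference.
    penalty = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled to 0-100.
    return 100 * penalty * math.exp(sum(log_precisions) / max_n)


human = "a dog runs on the grass"
print(round(bleu("a dog on the grass", human), 1))
print(bleu("two cats sleeping indoors", human))
```

A caption that shares many words and word pairs with the human one scores high, and one with no overlap scores zero, which is why the metric rewards matching wording rather than understanding.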